Stravito CTO Jonas Martinsson breaks down why AI hallucinations happen and how you can stop them in this in-depth Q&A.

Why do LLMs "hallucinate"? What causes these kinds of errors?

LLMs hallucinate because they're pattern-matching machines, not knowledge repositories. They predict text based on statistical patterns rather than retrieving verified facts. When uncertain, they can generate coherent completions instead of admitting ignorance.

Their training data compounds this problem. Fiction mixes with fact, and contradictory sources offer different truths. Without fact-checking mechanisms, models blend these contradictions into convincing but potentially false compromises.

In longer contexts, the limits of attention mechanisms mean models can "lose track" of earlier information and fabricate details that seem consistent but were never actually present.
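To make the pattern-prediction point concrete, here is a minimal sketch of how a model turns scores into a next token. The vocabulary and the scores are invented for illustration and do not come from any real model. It shows that picking the most likely token always yields an answer, even when the probabilities are nearly flat and the model is close to guessing.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pick_token(vocab, logits):
    """Return the most likely token and its probability.

    There is no built-in "I don't know" option here: some token is
    always returned, however flat the distribution is.
    """
    probs = softmax(logits)
    best = max(range(len(vocab)), key=probs.__getitem__)
    return vocab[best], probs[best]

# Hypothetical scores for the next word after "The study was published in".
vocab = ["2019", "2020", "2021", "2022"]
logits = [0.10, 0.20, 0.15, 0.12]  # nearly uniform: the model is unsure

token, prob = pick_token(vocab, logits)
print(token, round(prob, 2))
```

The chosen year wins with a probability only slightly above one in four, yet it would appear in the output as confidently as a well-supported fact.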

What's the difference between a creative LLM output and a harmful hallucination?

Context and consequence define the line. Creative outputs are valuable when imagination is the goal: brainstorming campaign themes, generating product names, or exploring "what if" scenarios. Harmful hallucinations occur when AI fabricates information in contexts requiring truth, such as market sizing, customer feedback, or competitive intelligence.

The difference is what's at stake. When AI invents a clever tagline, you gain inspiration. When it invents a market trend or customer quote, you risk strategic decisions based on fiction. Trust erodes quickly when stakeholders discover their "data-driven" decisions were built on hallucinations.

On Safeguards

How does Stravito help customers avoid hallucination risks?

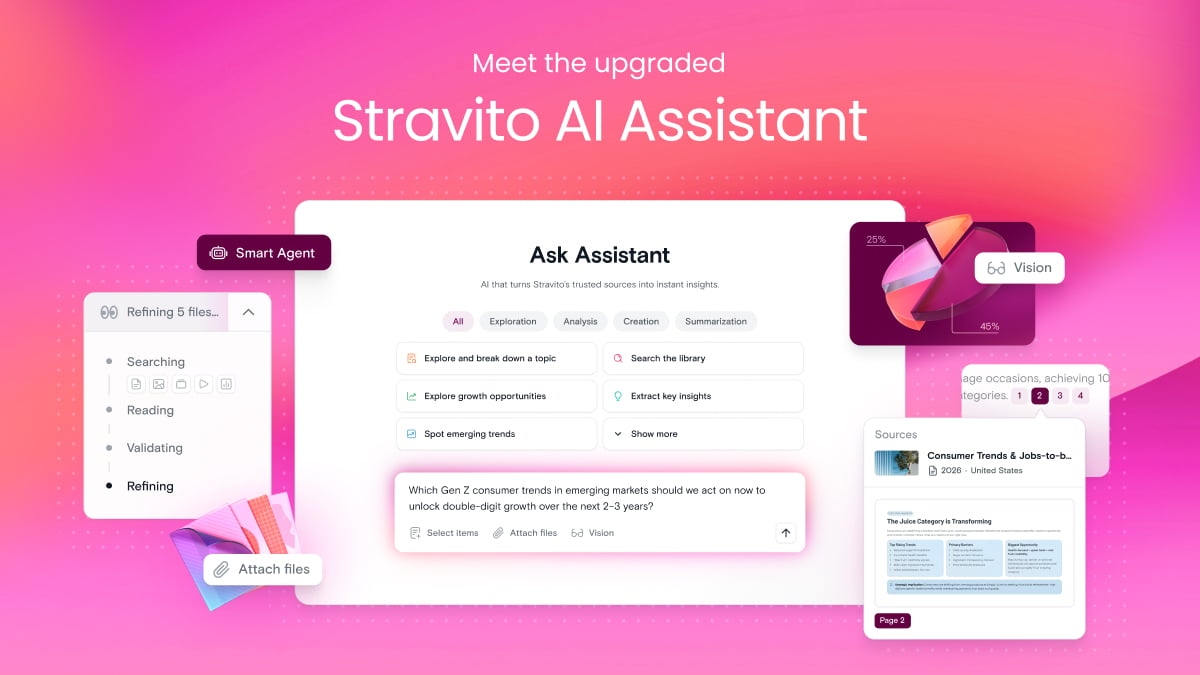

We built our Assistant with a simple principle: ground every answer in customers’ actual research. While our AI model brings language understanding from its training, we constrain its responses to information found in customers’ uploaded content. Every answer links directly to source materials so users can verify claims instantly.

The key is architectural discipline. We don't ask the AI to generate insights; we ask it to find and synthesize existing ones from customers’ knowledge bases. When relevant information isn't available in customers’ content, the Assistant says so rather than filling gaps with plausible-sounding speculation. This isn't about discarding what the model learned in training; it's about ensuring business decisions are based on actual research, not statistical probabilities.
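The grounding pattern described above can be sketched in a few lines. This is an illustrative toy, not Stravito's implementation: the `Passage` type, the word-overlap score, and the `min_score` threshold are all assumptions made for the example, and a production system would typically use a proper retrieval engine and a language model for the synthesis step.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    source: str  # hypothetical document title
    text: str

def tokenize(text):
    """Lowercase words longer than three characters, punctuation stripped."""
    words = (w.strip(".,?!\"'").lower() for w in text.split())
    return {w for w in words if len(w) > 3}

def overlap(question, passage):
    """Fraction of the question's words that also appear in the passage."""
    q = tokenize(question)
    if not q:
        return 0.0
    return len(q & tokenize(passage.text)) / len(q)

def grounded_answer(question, library, min_score=0.5):
    """Answer only from passages that match the question; otherwise abstain."""
    hits = [(overlap(question, p), p) for p in library]
    hits = [(score, p) for score, p in hits if score >= min_score]
    if not hits:
        # Abstain instead of filling the gap with speculation.
        return {"answer": None, "sources": []}
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return {
        "answer": " ".join(p.text for _, p in hits),
        "sources": [p.source for _, p in hits],  # every answer cites its sources
    }

# Hypothetical knowledge base.
library = [
    Passage("Pricing Study 2023", "Shoppers found the premium pricing tier too expensive."),
    Passage("Brand Tracker Q2", "Brand awareness rose among younger audiences."),
]

print(grounded_answer("Which pricing tier did shoppers find expensive?", library))
print(grounded_answer("Do customers prefer blue packaging?", library))
```

The first question is answered from the pricing study and cites it. The second finds no supporting passage, so the function returns no answer rather than inventing one.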

What are the most important safeguards that prevent hallucinations in an LLM system?

First, ensure AI only pulls from relevant sources. When AI retrieves the wrong documents to answer a question, it starts connecting dots that shouldn't be connected. It’s like citing your pricing research to answer questions about brand perception.

Second, train AI to say "I don't know." Many systems try to be helpful by guessing when they should simply point to gaps in your knowledge base. Better to admit limitations than invent insights.

Third, verify what matters. Most companies check if users are happy with AI responses, but it’s even more important to check if those responses are actually true. You need someone spotting when AI claims "customers prefer blue packaging" but your research never studied color preferences.
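The third safeguard, checking that responses are actually true, can be partly automated by flagging claims that the cited sources do not support. The sketch below is a deliberately crude version: the stopword list, the word-coverage measure, and the `min_coverage` threshold are assumptions for illustration. A real check would more likely rely on human review or a dedicated entailment model.

```python
import re

# Small hypothetical stopword list, just enough for the example.
STOPWORDS = {"a", "an", "the", "was", "were", "is", "are", "in", "of",
             "for", "to", "and", "that", "said", "most"}

def content_words(text):
    """Lowercase alphabetic words with stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def unsupported_claims(claims, sources, min_coverage=0.6):
    """Return the claims whose content words are poorly covered by every source."""
    flagged = []
    for claim in claims:
        words = content_words(claim)
        coverage = 0.0
        if words:
            coverage = max(
                (len(words & content_words(s)) / len(words) for s in sources),
                default=0.0,
            )
        if coverage < min_coverage:
            flagged.append(claim)
    return flagged

# Hypothetical research excerpt and two claims from an AI response.
sources = ["In the 2023 survey, most customers said price was the main reason for switching brands."]
claims = [
    "Price was the main reason customers switched brands.",
    "Customers prefer blue packaging.",
]

print(unsupported_claims(claims, sources))
```

Only the packaging claim is flagged, because nothing in the source mentions color or packaging. Flagged claims would then go to a person for review rather than being rejected automatically.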

What's the biggest misconception companies have about using LLMs safely?

That bigger models mean fewer hallucinations. In reality, more advanced models can sometimes produce more convincing fabrications. The underlying mistake is treating AI outputs as authoritative without appropriate verification systems. Safety comes from how you architect the entire system, not just from model selection.

What safeguards set Stravito apart from other AI-powered platforms?

Our domain-specific approach to insights management gives us an advantage. We're not trying to build general-purpose AI but rather specialized tools for insights professionals. Our platform was designed from the ground up to maintain knowledge integrity, with AI as an extension rather than a replacement. We prioritize traceability: showing not just answers but exactly which research supports each conclusion. Our evaluation suite is tailored to the specific use cases and content that companies bring to Stravito.

On Business Impact

Why should business leaders, especially in marketing and insights, care about LLM safety?

Market insights drive million-dollar decisions. The cost of acting on hallucinated insights isn't just wasted resources; it's missed opportunities and potential market failures. For insights teams specifically, credibility is currency. When an insights professional presents findings to leadership, there can't be any question about whether those insights are real or fabricated.

Advice

If you had to give one piece of advice to a company implementing genAI, what would it be?

Start with a clear, specific, and high-value use case where the information sources are well-defined and can be controlled. Prioritize grounding your LLM in your own trusted data from day one. Don't try to boil the ocean. Instead, achieve an early win with a system that provides verifiable, accurate results, building trust and experience before expanding.

How should businesses balance innovation with responsibility when using LLMs?

Implement a staged approach where you expand use cases as safety mechanisms mature. Focus first on augmenting human capabilities rather than replacing human judgment. The companies that will succeed with AI aren't those who move fastest, but those who build sustainable systems where AI and human expertise complement each other.

TL;DR summary:

Large language models (LLMs) can "hallucinate"—generate convincing but false information—because they predict patterns rather than retrieve facts. This is especially risky in business contexts where trust and accuracy matter most.

In this Q&A, Stravito’s CTO explains the difference between creative outputs (like brainstorming) and harmful hallucinations (like fabricated customer quotes or market data). The key to safety: grounding AI responses in your company’s verified research, not generic training data.

At Stravito, our AI Assistant is designed to synthesize (not invent) insights. Every answer links back to source documents so users can fact-check instantly. We believe the safest AI isn’t the biggest or newest model; it’s the one built with the right guardrails, for the right use case.

Key takeaways:

- Use AI only where it can reference trusted, controlled sources.

- Teach AI to say “I don’t know” when data is missing.

- Prioritize traceability and truth over fluency and speed.

- Don’t chase innovation at the expense of credibility.

When it comes to insights, reality always beats plausibility.